Challenges for Data-driven Natural Language Analysis beyond Standard Data

Workshop Description

Recent advances in empirical natural language processing and machine learning have coincided with the wide availability of new resources, such as social media data, learner and code-switching data, historical data, and speech data, among many others. While most traditional tasks in natural language processing rely on carefully curated and linguistically annotated data, this newer generation of "non-standard" data is often noisier and resists traditional assumptions about language use and data annotation. The goal of this workshop is to bring together researchers working on non-standard data from a variety of angles, ranging from annotation standards and methodology to linguistic questions and issues in machine learning and experimentation.

Invited Speakers

- Stefanie Dipper (Ruhr-Universität Bochum)

- Chris Dyer (Carnegie Mellon University/Google DeepMind)

- Pascale Fung (Hong Kong University of Science and Technology)

- David Jurgens (Stanford University)

- Djamé Seddah (University of Paris-Sorbonne)

- Anders Søgaard (University of Copenhagen)

Dates and Location

When: October 13-14, 2016.

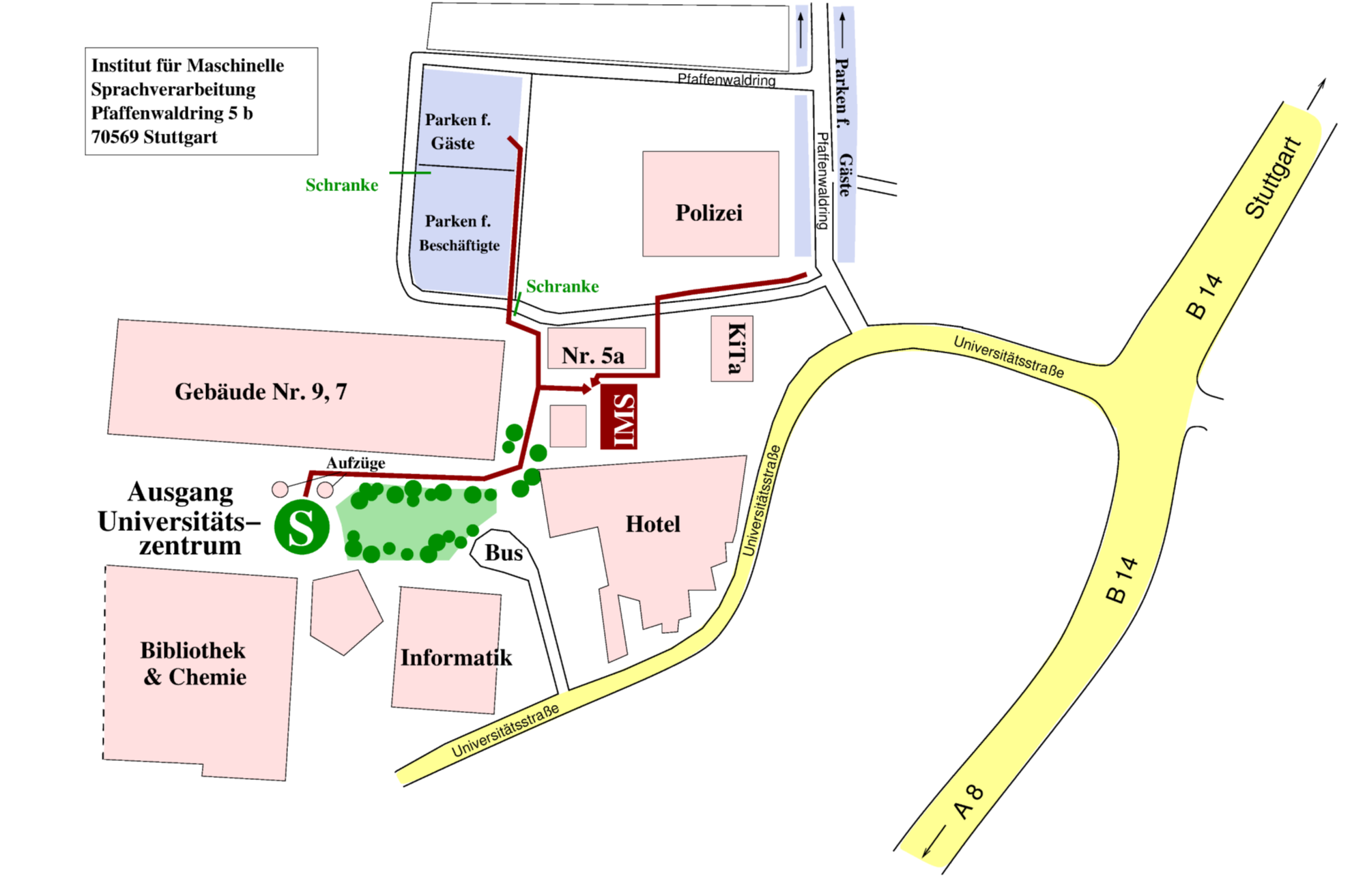

Where: IMS building (Pfaffenwaldring 5b, Stuttgart 70569), campus "Vaihingen" -- University of Stuttgart. See section "Maps" for more information.

Program

Thursday, October 13.

09:30-10:00 Opening session

10:00-11:00 Invited Talk: Anders Søgaard. How far can we get with multi-task learning?

11:00-11:30 Coffee break

11:30-12:30 Invited Talk: Djamé Seddah. Coping with the Jabberwocky Syndrome: Morpho-Syntactic Analysis in Hostile Environment

12:30-13:00 SFB Project Teaser Talks

13:00-14:30 Lunch break

14:30-16:00 SFB Poster Session

16:00-16:30 Coffee break

16:30-17:30 Invited Talk: Pascale Fung. The Challenges of Emotion Speech Database Collection

19:00 Workshop dinner at Trollinger Stubn

Friday, October 14.

09:30-10:30 Invited Talk: Stefanie Dipper. Creating and processing historical corpora: spelling variation and other challenges

10:30-11:00 Coffee break

11:00-12:00 Invited Talk: David Jurgens. Observing cultural exchange across linguistic and geographic boundaries in social media

12:00-13:30 Lunch break

13:30-14:30 Invited Talk: Chris Dyer. User-generated text: opportunities and models

14:30-15:00 Coffee break

15:00-16:30 Panel Discussion

16:30-17:00 Closing remarks

Abstracts

Anders Søgaard. How far can we get with multi-task learning?

Multi-task learning has been proposed in robust learning, weakly supervised learning, domain adaptation, and cross-lingual adaptation scenarios. While researchers have presented positive results, it is not clear when and by how much multi-task learning can improve the performance of state-of-the-art NLP models. We review recent work, focusing on multi-task learning as a regularization strategy. Important questions are: Does multi-task learning lead to higher inductive bias in practice? Can multi-task learning reduce the need for labeled data? When is an auxiliary task relevant for a target task? Is there an upper bound on how far we can get with multi-task learning? I do not promise to answer all of these questions, but at least now they’ve been asked.

Djamé Seddah. Coping with the Jabberwocky Syndrome: Morpho-Syntactic Analysis in Hostile Environment

In recent years, statistical parsers have reached high performance levels on well-edited texts. Domain adaptation techniques have improved parsing results on text genres differing from the journalistic data most parsers are trained on. However, such corpora usually comply with standard linguistic, spelling and typographic conventions. Meanwhile, new communication media have given rise to new types of online textual data. Although valuable, e.g., for data mining and sentiment analysis, such user-generated content rarely complies with standard conventions: it is noisy. This prevents most NLP tools, especially treebank-based parsers, from performing well on such data. For this reason, we have developed the French Social Media Bank, the first user-generated content treebank for French, a morphologically richer language (MRL) than English. The most recent release of this resource contains 3700 sentences from various social media sources, including data specifically chosen for their high noisiness.

We describe here how we created this treebank and present the methodology we used for fully annotating it. We also provide baseline POS tagging and statistical constituency parsing results, which are far lower than the usual results on edited texts. This highlights how difficult it is to process such noisy data automatically in an MRL. In this talk, we will also detail the cost of building such resources and the difficulties of maintaining a long-standing effort over a few years.

Pascale Fung. The Challenges of Emotion Speech Database Collection

We present and discuss an ongoing data collection and annotation effort to build a large corpus for speech emotion detection. We collected 207 hours of public speech data from TED talks. We highlight the expected relation between the distribution of automatically recognized emotions and the common features of a TED talk. We then employed manual annotators to improve the quality of the annotations, building a two-level annotation process in which a main emotion label is supplemented with multiple secondary emotion descriptors. Additional annotations were also obtained through crowdsourcing, and we provide an analysis of the agreement between multiple annotators. We compared the automatic and manual labeling: the automatic multiclass annotation API obtained an average accuracy of 28.4%, while automatic classification with a CNN reached more than 60% average accuracy in various experimental settings. We also discuss various active learning methods we are using to select the samples to be annotated in order to obtain more relevant data at a faster pace. A large speech emotion detection corpus will enable more accurate emotion detection systems, which can then be integrated into dialog systems to recognize and react to the user's emotion.

Stefanie Dipper. Creating and processing historical corpora: spelling variation and other challenges

In my talk, I want to address selected aspects of creating and analyzing historical corpora. In particular, I will talk about difficult cases of morphological and POS tagging. The focus of the talk will be on spelling variation and ways of normalizing the data. I will discuss how the variation can be used for regional and temporal classification.

David Jurgens. Observing cultural exchange across linguistic and geographic boundaries in social media

Language differences often form communication barriers between social groups. However, multilingual individuals can act as cultural bridges by sharing content from one language to another. These individuals serve a critical communication role for social groups such as diaspora and linguistic minorities whose primary language is not the language spoken around them. We ask how these groups adopt and share other-language content across linguistic and geographic boundaries. In this talk, I introduce our new methods for jointly addressing the problems of location inference and language detection across hundreds of languages and in cases where the text contains code-switching between multiple languages. In addition, I will discuss the challenges in detecting code-switched text and in identifying speakers of infrequent languages in social media. Then, I describe our study of inter-lingual exchanges among tens of millions of users across the globe. Our results reveal patterns in cultural adoption and integration, suggesting regularities in the nature and process of information flows across community and language boundaries.

Chris Dyer. User-generated text: opportunities and models

User-generated text offers challenges and unique opportunities in the study of language that are obscured in the edited genres that have been the primary focus of research in computational linguistics. The content is arguably closer to language as it is spoken (evidence for this proposition is the frequency of non-prestige dialect forms, orthographic variants that encode pronunciation, and code-switching), but it retains the computational convenience of a densely encoded discrete form. In this talk, I review work by my group that has exploited this to mine translations of colloquial language from publicly available social media. However, such content challenges some of the basic modeling assumptions that we make: in particular, that a word's identity is determined by its orthographic form. To deal with these changes, we have developed several neural-net–based models that treat word representation as a function of orthography. Results in language modeling, machine translation, part-of-speech tagging, and syntactic parsing show that despite the complexity of the relationship between "form" and "function" at the lexical level, it is learnable by the function classes we use.

Organizers

Contact: Özlem Çetinoğlu (ozlem@ims.uni-stuttgart)

Organizers: Özlem Çetinoğlu (IMS), Detmar Meurers (Tübingen), Kyle Richardson (IMS), Christian Scheible (IMS)

Supported by Deutsche Forschungsgemeinschaft (DFG) via project SFB 732, Incremental Specification in Context.

Maps

The Google map below shows the location of all places. Click the button at the top left to see more information. The second map, of the campus, shows the location of the IMS.